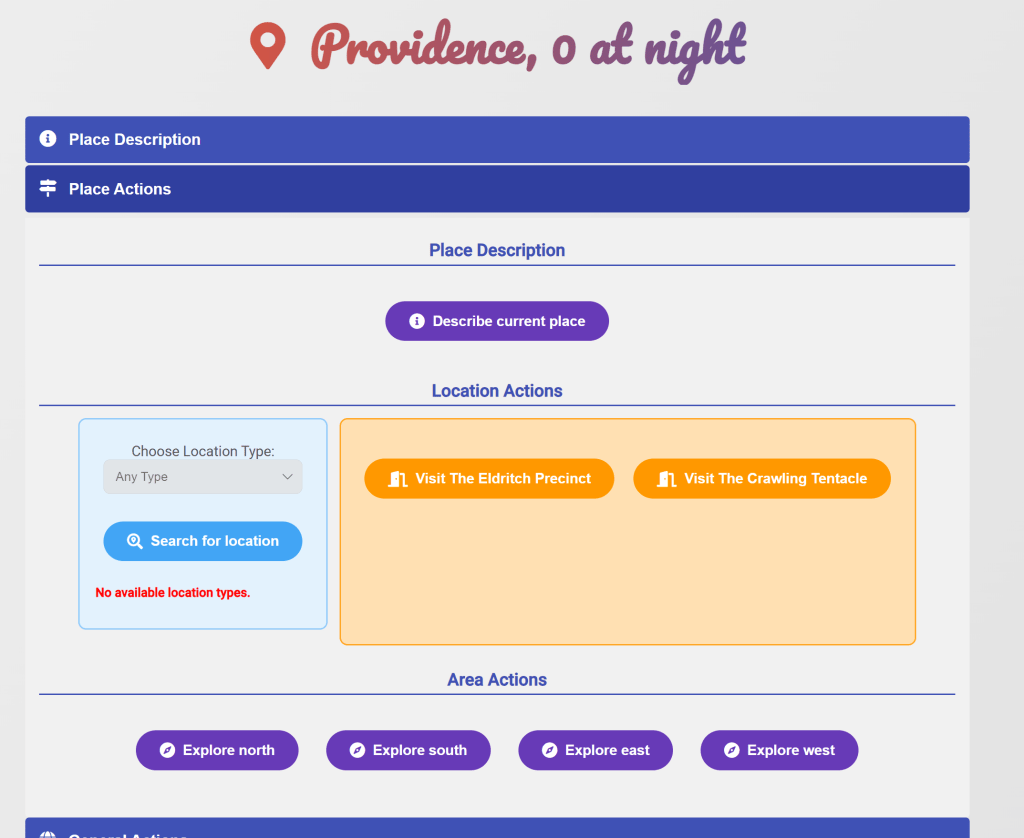

I recommend checking out the previous parts if you don’t know what this “neural narratives” thing is about. In short, I wrote a system in Python for holding multi-character conversations with large language models (like Llama 3.1), in which the characters are isolated in terms of memories and bios, so nothing leaks to the other participants the way it does in Mantella. Here’s the GitHub repo.

Without further preamble…

I approached one of the students.

Let’s head to the library, then.

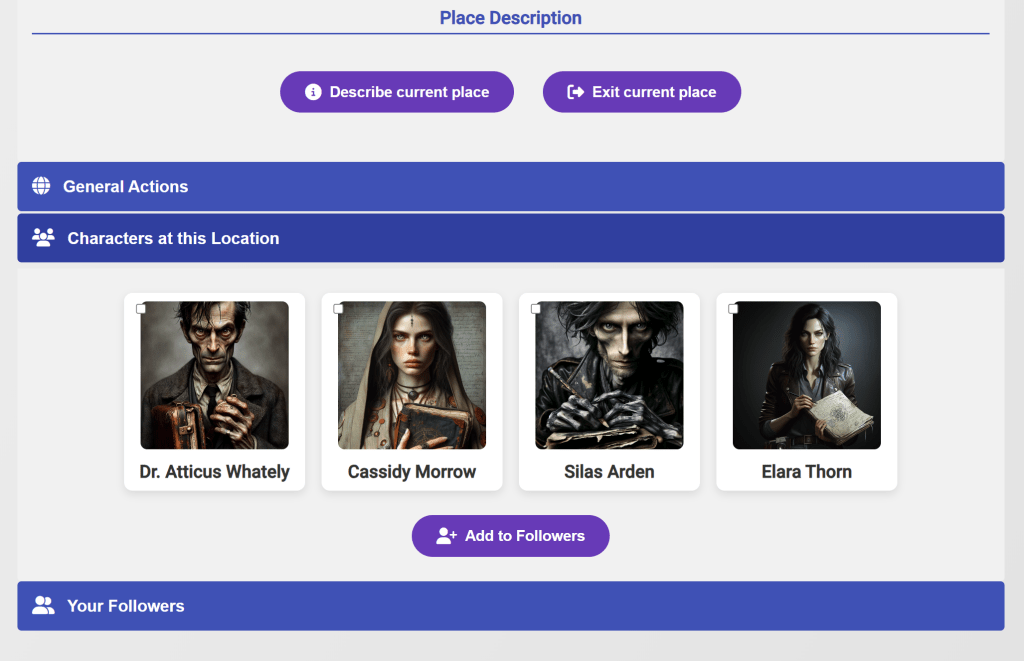

As I was having the big multi-char convo with Elara and two of her pals, as well as the other two people on my side, Elara treated her own companions as if she didn’t know them. Of course, she doesn’t actually know them: a character is only aware of what their bio says and of the contents of their memories. Those people hadn’t been introduced in her memories yet. That’s an interesting problem to solve.

Of course, I could just make up their relationship by writing directly in their memories, but the whole point of having this app is for the large language model to act as a Dungeon Master. So, I’m going to create a whole new page on the site, one called Connections, that offers the opportunity to generate a relationship between two characters.

Here is the prompt I’ve put together so that the large language model will come up with a compelling, meaningful connection between two characters:

Instructions:

Using the character data provided for {name_a} and {name_b}, generate a meaningful and compelling connection between them. The connection should align with their personal information and memories. Ensure that the relationship is coherent, enriches their backstories, and is consistent with their individual characteristics. The connection does not need to be intimate or romantic; it can be any significant relationship such as friendship, rivalry, mentorship, familial ties, etc.

Character {name_a}:

{character_a_information}

Character {name_b}:

{character_b_information}

Your Task:

Using the above information, write a detailed description (approximately 3-5 sentences) of the connection between {name_a} and {name_b}. The description should:

Explain how they met or became connected.

Highlight the nature of their relationship (e.g., allies, rivals, mentor and protégé, siblings).

Incorporate elements from their personalities, backgrounds, likes, dislikes, secrets, or memories.

Be compelling and add depth to both characters.

Ensure that the connection is believable and enhances the narrative potential of their interactions.

Well, pulling off that Connections page was far quicker than I anticipated, but the app is already quite mature when it comes to examples of how stuff works.
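For reference, filling in a prompt template like the one above takes only a few lines of Python. This is a minimal sketch, not the repo's actual code: the constant `CONNECTION_PROMPT_TEMPLATE` and the function `build_connection_prompt` are names made up for illustration, and the template text is abbreviated.

```python
# Abbreviated copy of the prompt quoted above; the placeholders match the
# ones in the original text. The names below are hypothetical.
CONNECTION_PROMPT_TEMPLATE = """Instructions:

Using the character data provided for {name_a} and {name_b}, generate a meaningful and compelling connection between them.

Character {name_a}:

{character_a_information}

Character {name_b}:

{character_b_information}

Your Task:

Using the above information, write a detailed description (approximately 3-5 sentences) of the connection between {name_a} and {name_b}.
"""


def build_connection_prompt(
    name_a: str,
    character_a_information: str,
    name_b: str,
    character_b_information: str,
) -> str:
    """Return the prompt with every placeholder replaced by character data."""
    # str.format only parses the template, not the substituted values, so
    # braces inside a character's bio or memories are left untouched.
    return CONNECTION_PROMPT_TEMPLATE.format(
        name_a=name_a,
        name_b=name_b,
        character_a_information=character_a_information,
        character_b_information=character_b_information,
    )
```

The resulting string is what would get sent to the large language model; how the character information itself is assembled (bio, memories, and so on) is left out of this sketch.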

The connection between them (that gets stored as a memory for both characters) isn’t shown to the user, to keep the intrigue. You can, of course, check it out in the JSON files that store the characters, but for storytelling purposes, it’s better to keep the secrecy. Like all other forms I’ve been dealing with, this one interacts with the server via AJAX, without actually reloading the page. Very slick.
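Storing the same connection as a memory for both characters could look roughly like the sketch below. It assumes one JSON file per character with a `"memories"` list, which is a guess at the layout for illustration only; the function names are hypothetical too.

```python
import json
from pathlib import Path


def add_memory(character_file: Path, memory: str) -> None:
    """Append a memory to a character's JSON file (assumed layout)."""
    data = json.loads(character_file.read_text(encoding="utf-8"))
    # Assumption: memories live in a list under the "memories" key.
    data.setdefault("memories", []).append(memory)
    character_file.write_text(
        json.dumps(data, indent=2, ensure_ascii=False), encoding="utf-8"
    )


def store_connection(file_a: Path, file_b: Path, connection: str) -> None:
    """Write the generated connection into both characters' memories."""
    for character_file in (file_a, file_b):
        add_memory(character_file, connection)
```

Nothing here returns the connection text to the caller, which matches the idea of keeping it hidden from the user while both characters "remember" it.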

As I was checking out how the ongoing dialogue was getting stored, I realized that at some point I had mistakenly stored the characters’ names instead of their descriptions, so I don’t think that the characters were aware of how the others looked as they spoke. This fix will open up plenty of dialogue flavor.

The error was worse than I anticipated. Turns out that when I refactored the gigantic manager class CharactersManager into five or so other classes, one of them a class named Character that handled everything related to character attributes, I had copy-pasted my way into horror: I was returning the property “name” even if what the user demanded was the description, the likes, the dislikes, the personality, etc. So these last couple of days, most of the prompts involving characters, if not all, have been missing about half of the proper data. That’s why you pytest the shit out of your app. Sorry, programming gods.
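The kind of test that catches this copy-paste bug is tiny. Here's a minimal sketch in pytest style; the `Character` class below is a hypothetical stand-in, not the real one from the repo, and the attribute list is taken from the ones mentioned above.

```python
class Character:
    """Hypothetical stand-in for a class that exposes character attributes."""

    def __init__(self, data: dict):
        self._data = data

    @property
    def name(self) -> str:
        return self._data["name"]

    @property
    def description(self) -> str:
        # The bug described above would be returning self._data["name"] here.
        return self._data["description"]

    @property
    def personality(self) -> str:
        return self._data["personality"]

    @property
    def likes(self) -> str:
        return self._data["likes"]

    @property
    def dislikes(self) -> str:
        return self._data["dislikes"]


ATTRIBUTES = ("name", "description", "personality", "likes", "dislikes")


def test_each_property_returns_its_own_field():
    # Give every field a distinct sentinel value, so a property that
    # returns the wrong field fails the comparison.
    data = {attribute: f"<{attribute}>" for attribute in ATTRIBUTES}
    character = Character(data)
    for attribute in ATTRIBUTES:
        assert getattr(character, attribute) == data[attribute]
```

The trick is the distinct sentinel per field: if every field held the same test value, a property returning the name instead of the description would still pass.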

Anyway, long dialogue incoming:

That’s all for now. The following isn’t related to anything, but I must say it: recently I recommended the anime adaptation of Uzumaki. Not once, but twice. Well, consider that recommendation rescinded. Those motherfuckers really pulled a fast one on us. Dandadan is still cool, though.
