I’ve recently implemented an emotions and expressions system in my app, a browser-based platform for playing immersive sims, RPGs, adventure games, and the like. If you aren’t familiar with the emotions and expressions system, check out the linked previous post for reference.

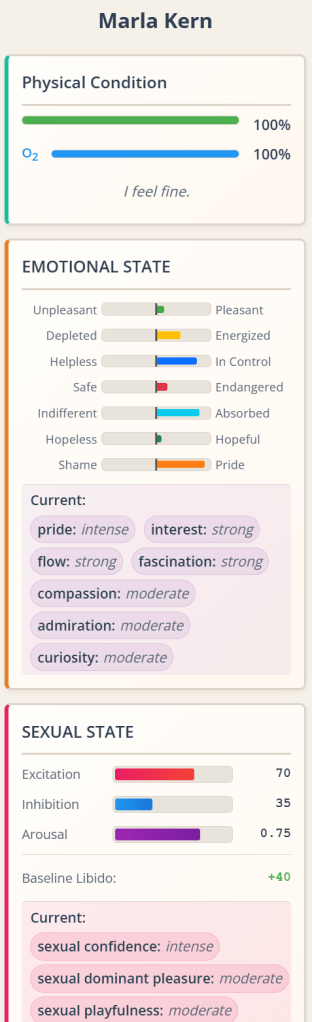

I was setting up a private scenario to further test the processing of emotions for characters during changing situations. This was the initial state of one Marla Kern:

Those current emotions look perfectly fine for the average person. There’s just one problem: Marla Kern is a sociopath. So that “compassion: moderate” would have wrecked everything, and triggered expressions (which are narrative beats) that would have her acting with compassion.

This is clearly a structural issue, and I needed to solve it in the most robust, psychologically realistic way possible while also strengthening the current system. I did some deep research with my pal ChatGPT, and this is what we came up with (well, mostly he did):

We lacked a trait dimension that captured stable empathic capacity. The current 7 mood axes are all fast-moving state/appraisal variables that swing with events. Callousness vs. empathic concern is a stable personality trait that should modulate specific emotions. That meant creating a new component, named affect_traits, that actors need to have (defaults are applied otherwise), with the following properties:

- Affective empathy: capacity to feel what others feel. Allows emotional resonance with others’ joy, pain, distress. (0=absent, 50=average, 100=hyper-empathic)

- Cognitive empathy: ability to understand others’ perspectives intellectually. Can be high even when affective empathy is low. (0=none, 50=average, 100=exceptional)

- Harm aversion: aversion to causing harm to others. Modulates guilt and inhibits cruelty. (0=enjoys harm, 50=normal aversion, 100=extreme aversion)
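To make the shape of that component concrete, here is a minimal sketch in Python. The class name, the `normalized` helper, and the values given to Marla are all hypothetical; only the three property names, the 0 to 100 scale, and the default of 50 come from the definitions above. The rescaling to 0..1 is an assumption based on how the prototype gates compare traits against thresholds such as 0.25.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AffectTraits:
    """Stable traits on a 0..100 scale; 50 is the average person."""

    affective_empathy: int = 50
    cognitive_empathy: int = 50
    harm_aversion: int = 50

    def __post_init__(self):
        # Reject values outside the documented 0..100 range.
        for name, value in vars(self).items():
            if not 0 <= value <= 100:
                raise ValueError(f"{name} must be in 0..100, got {value}")

    def normalized(self) -> dict[str, float]:
        # Assumption: traits are rescaled to 0..1 before prototypes use them.
        return {name: value / 100 for name, value in vars(self).items()}


# An actor without the component falls back to the defaults.
default_actor = AffectTraits()

# Hypothetical values for a sociopath: understands people, feels little.
marla = AffectTraits(affective_empathy=5, cognitive_empathy=70, harm_aversion=10)
```

Keeping cognitive empathy separate from affective empathy is what lets a character like Marla read people well while feeling almost nothing for them.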

In addition, this issue revealed a basic gap in our fast-moving mood axes: we lacked one for affiliation, whose definition is now “Social warmth and connectedness. Captures momentary interpersonal orientation. (-100=cold/detached/hostile, 0=neutral, +100=warm/connected/affiliative)”. We already had “engagement” as a mood axis, but that doesn’t necessarily encompass affiliation, so our set of mood axes was genuinely incomplete.

Emotions are cooked from prototypes. Given these changes, we now needed to update the affected prototypes:

```json
"compassion": {
  "weights": {
    "valence": 0.15,
    "engagement": 0.70,
    "threat": -0.35,
    "agency_control": 0.10,
    "affiliation": 0.40,
    "affective_empathy": 0.80
  },
  "gates": [
    "engagement >= 0.30",
    "valence >= -0.20",
    "valence <= 0.35",
    "threat <= 0.50",
    "affective_empathy >= 0.25"
  ]
},
"empathic_distress": {
  "weights": {
    "valence": -0.75,
    "arousal": 0.60,
    "engagement": 0.75,
    "agency_control": -0.60,
    "self_evaluation": -0.20,
    "future_expectancy": -0.20,
    "threat": 0.15,
    "affective_empathy": 0.90
  },
  "gates": [
    "engagement >= 0.35",
    "valence <= -0.20",
    "arousal >= 0.10",
    "agency_control <= 0.10",
    "affective_empathy >= 0.30"
  ]
},
"guilt": {
  "weights": {
    "self_evaluation": -0.6,
    "valence": -0.4,
    "agency_control": 0.2,
    "engagement": 0.2,
    "affective_empathy": 0.45,
    "harm_aversion": 0.55
  },
  "gates": [
    "self_evaluation <= -0.10",
    "valence <= -0.10",
    "affective_empathy >= 0.15"
  ]
}
```

That fixes everything emotions-wise. A character with low affective empathy won’t feel much in terms of compassion despite the engagement, will feel even less empathic distress, and won’t suffer as much guilt.
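To illustrate how a gate on affective empathy shuts an emotion off, here is a rough Python sketch that scores the compassion prototype. The scoring rule is an assumption, not the engine's actual formula: it returns 0 when any gate fails, and otherwise a weighted sum divided by the total absolute weight, clamped to 0..1. It also assumes mood axes are normalized to -1..1 and traits to 0..1. The function names and the sample mood are hypothetical.

```python
import operator

OPS = {">=": operator.ge, "<=": operator.le}

# Copied from the compassion prototype above.
COMPASSION = {
    "weights": {
        "valence": 0.15, "engagement": 0.70, "threat": -0.35,
        "agency_control": 0.10, "affiliation": 0.40, "affective_empathy": 0.80,
    },
    "gates": [
        "engagement >= 0.30", "valence >= -0.20", "valence <= 0.35",
        "threat <= 0.50", "affective_empathy >= 0.25",
    ],
}


def gates_pass(gates, state):
    """Return True only if every 'axis op threshold' gate holds."""
    for gate in gates:
        axis, op, threshold = gate.split()
        if not OPS[op](state[axis], float(threshold)):
            return False
    return True


def intensity(prototype, state):
    """Assumed scoring: gated, normalized weighted sum clamped to 0..1."""
    if not gates_pass(prototype["gates"], state):
        return 0.0
    weights = prototype["weights"]
    raw = sum(w * state[axis] for axis, w in weights.items())
    scale = sum(abs(w) for w in weights.values())
    return max(0.0, min(1.0, raw / scale))


# Hypothetical mood: engaged, mildly positive, no threat.
mood = {"valence": 0.1, "engagement": 0.6, "threat": 0.0,
        "agency_control": 0.0, "affiliation": 0.2}

average_person = intensity(COMPASSION, {**mood, "affective_empathy": 0.5})
sociopath = intensity(COMPASSION, {**mood, "affective_empathy": 0.05})
```

Under these assumptions the same mood yields a moderate compassion score for the average person and exactly zero for the low-empathy character, because the `affective_empathy >= 0.25` gate fails before any weights are applied.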

This means I’ll have to review the prerequisites of the 76 expressions implemented so far, which are as complex as the following summary for a “flat reminiscence” narrative beat:

“Flat reminiscence triggers when the character is low-energy and mildly disengaged, with a notably bleak sense of the future, and a “flat negative” tone like apathy/numbness/disappointment—but without the emotional bite of lonely yearning. It also refuses to trigger if stronger neighboring states would better explain the moment (nostalgia pulling warmly, grief hitting hard, despair bottoming out, panic/terror/alarm spiking, or anger/rage activating). Finally, it only fires when there’s a noticeable recent drop in engagement or future expectancy (or a clean crossing into disengagement), which prevents the beat from repeating every turn once the mood is already flat.”

That is all modeled mathematically, not by a large language model. In addition, I’ve created an extremely robust analysis system that uses static analysis, Monte Carlo simulation, and witness state generation to determine how feasible any given set of prerequisites is. I’ll make a video about that in the future.
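As a taste of the Monte Carlo part, here is a small Python sketch that estimates how often a set of gates passes under random states. This is not the actual analysis system. It assumes every mood axis is drawn independently and uniformly from -1..1 and every trait from 0..1, which real play sessions almost certainly violate, and the names `sample_state` and `pass_rate` are made up for the example.

```python
import operator
import random

OPS = {">=": operator.ge, "<=": operator.le}

# The compassion gates, used here as a stand-in for expression prerequisites.
COMPASSION_GATES = [
    "engagement >= 0.30", "valence >= -0.20", "valence <= 0.35",
    "threat <= 0.50", "affective_empathy >= 0.25",
]

# Traits are assumed to live in 0..1; everything else is a -1..1 mood axis.
TRAITS = {"affective_empathy", "cognitive_empathy", "harm_aversion"}


def sample_state(axes, rng):
    """Draw one random state, uniform and independent per axis (assumption)."""
    return {
        axis: rng.uniform(0.0, 1.0) if axis in TRAITS else rng.uniform(-1.0, 1.0)
        for axis in axes
    }


def pass_rate(gates, samples=20000, seed=0):
    """Estimate the fraction of random states that satisfy every gate."""
    rng = random.Random(seed)
    parsed = [(axis, OPS[op], float(t)) for axis, op, t in (g.split() for g in gates)]
    axes = sorted({axis for axis, _, _ in parsed})
    hits = 0
    for _ in range(samples):
        state = sample_state(axes, rng)
        if all(op(state[axis], t) for axis, op, t in parsed):
            hits += 1
    return hits / samples


rate = pass_rate(COMPASSION_GATES)
```

With independent uniform axes the exact answer is the product of the per-gate probabilities, 0.35 × 0.275 × 0.75 × 0.75 ≈ 0.054, so the estimate should land close to that. A rate near zero would flag prerequisites that can almost never fire.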
