If you don’t know what this “neural narratives” thing is about, I recommend checking out the previous parts. In short, I wrote a system in Python for holding multi-character conversations with large language models (like Llama 3.1), in which the characters are isolated in terms of memories and bios, so nothing leaks to other participants the way it does in Mantella. Here’s the GitHub repo.

The last entry ended when I had managed to add a hole-in-the-wall to the ongoing story. Let’s enter it and allow the player character to describe it.

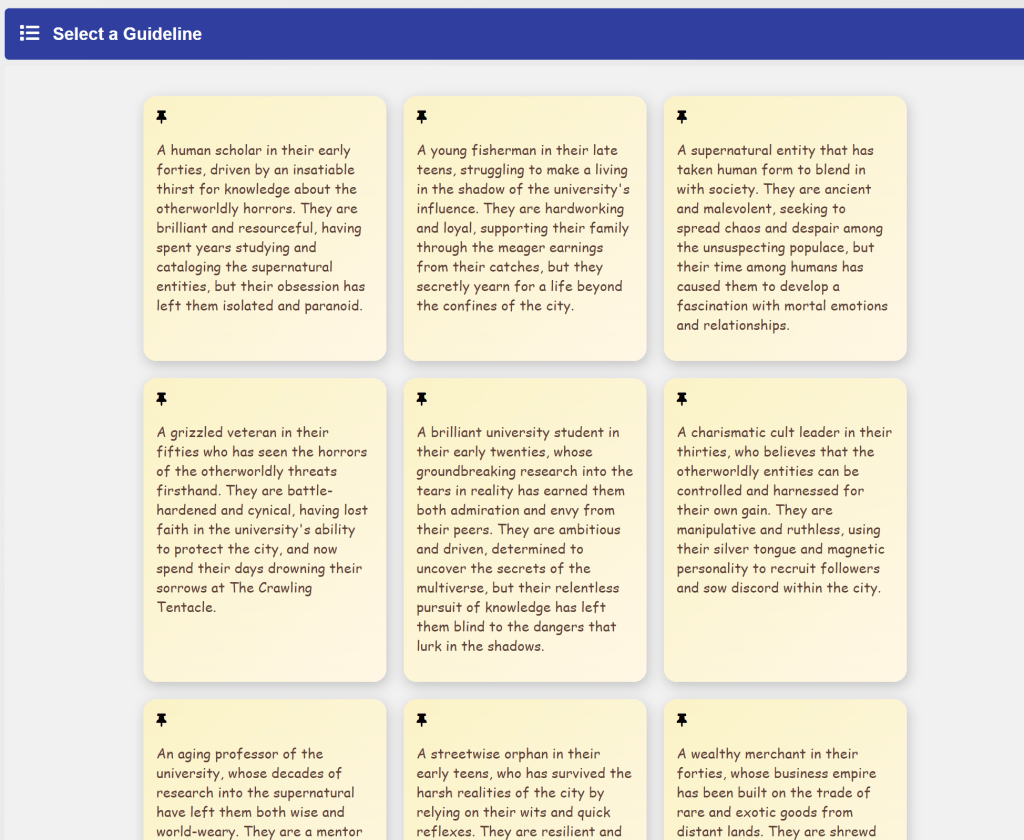

Every time you enter a new location, the app checks if there is a list of character generation guidelines already created for this combination of places, and if there isn’t, it generates that list. After a short while, the app produced the following:
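The check described above boils down to a "get or create" lookup keyed by the combination of places. Here is a minimal sketch of that idea, not the code from the repo: `GuidelinesCache`, `PlaceKey`, and `fake_generate` are hypothetical names, and the generator function stands in for the actual call to the large language model.

```python
from typing import Callable, Dict, List, Optional, Tuple

# Hypothetical key: (world, region, area, location); location may be None.
PlaceKey = Tuple[str, str, str, Optional[str]]


class GuidelinesCache:
    """Keeps one list of character generation guidelines per combination of places."""

    def __init__(self, generate: Callable[[PlaceKey], List[str]]) -> None:
        # `generate` is an assumption: it stands in for the LLM call.
        self._generate = generate
        self._store: Dict[PlaceKey, List[str]] = {}

    def get_or_create(self, key: PlaceKey) -> List[str]:
        # Only run the slow generator the first time this combination is seen.
        if key not in self._store:
            self._store[key] = self._generate(key)
        return self._store[key]


def fake_generate(key: PlaceKey) -> List[str]:
    # Placeholder for a real model call; returns one canned guideline.
    return [f"A character who fits {key[3] or key[2]}"]
```

In a real app the store would presumably live on disk rather than in a dictionary, so the guidelines survive between sessions.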

That one about a brilliant university student sounds like a great contrast to the existing characters. I pressed the button that generates a new character, and at the end of that process, the app presented me with the following portrait:

That looks real good. Great job on the fingers, AI. However, the large language model named this character Elara Thorn, the exact name that was generated for a character in another one of my trial-run stories with this system. Perhaps at some point I’ll need to program a way of reading names from a gigantic list, then presenting the AI with about twenty random ones to choose from.
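That name-list idea could be sketched roughly as follows. This is only an illustration under assumptions: the function names, the `used_names` set, and the wording of the instruction are all hypothetical, and nothing here exists in the app yet.

```python
import random
from typing import List, Optional, Sequence, Set


def pick_candidate_names(
    all_names: Sequence[str],
    used_names: Set[str],
    count: int = 20,
    rng: Optional[random.Random] = None,
) -> List[str]:
    """Return up to `count` random names that no existing character uses."""
    rng = rng or random.Random()
    # Drop names already taken, and de-duplicate while keeping order.
    available = list(dict.fromkeys(n for n in all_names if n not in used_names))
    return rng.sample(available, min(count, len(available)))


def build_name_instruction(candidates: Sequence[str]) -> str:
    # Hypothetical wording for the line appended to the character prompt.
    return "Choose the character's name from this list: " + ", ".join(candidates)
```

Passing a seeded `random.Random` makes the selection reproducible, which helps when debugging why the model keeps landing on the same name.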

Anyway, let’s have a multi-char convo.

After such a tense conversation, I figured that the player character would reflect on it, as well as his general circumstances. I happened to have implemented a system for self-reflection: the large language model looks at the character’s memories, then writes a sort of journal entry from the first-person perspective. It gets saved along with the rest of the memories. That helps color the dialogue and in general make the character sound more intelligent. Of course, now I generate audio files of those self-reflections as well.
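In outline, the self-reflection step is: build a prompt from the memories, ask the model for a first-person journal entry, then store the entry as one more memory. A rough sketch of that flow follows; the prompt wording, the function names, and the `llm` callable are assumptions for illustration, not the app's real interface.

```python
from typing import Callable, List


def build_reflection_prompt(name: str, memories: List[str]) -> str:
    """Turn a character's memories into a journal-writing prompt."""
    memory_lines = "\n".join(f"- {m}" for m in memories)
    return (
        f"You are {name}. Here are your memories:\n{memory_lines}\n"
        "Write a short journal entry in the first person reflecting on them."
    )


def reflect(name: str, memories: List[str], llm: Callable[[str], str]) -> str:
    """Generate a self-reflection and save it alongside the other memories."""
    # `llm` is a stand-in for whatever function sends a prompt to the model.
    entry = llm(build_reflection_prompt(name, memories)).strip()
    memories.append(entry)  # Later prompts will now include the reflection.
    return entry
```

Because the reflection lands in the same list as the ordinary memories, every later dialogue prompt picks it up without any extra plumbing.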

My hardened player character returned home. I’ll let him describe his living arrangements.

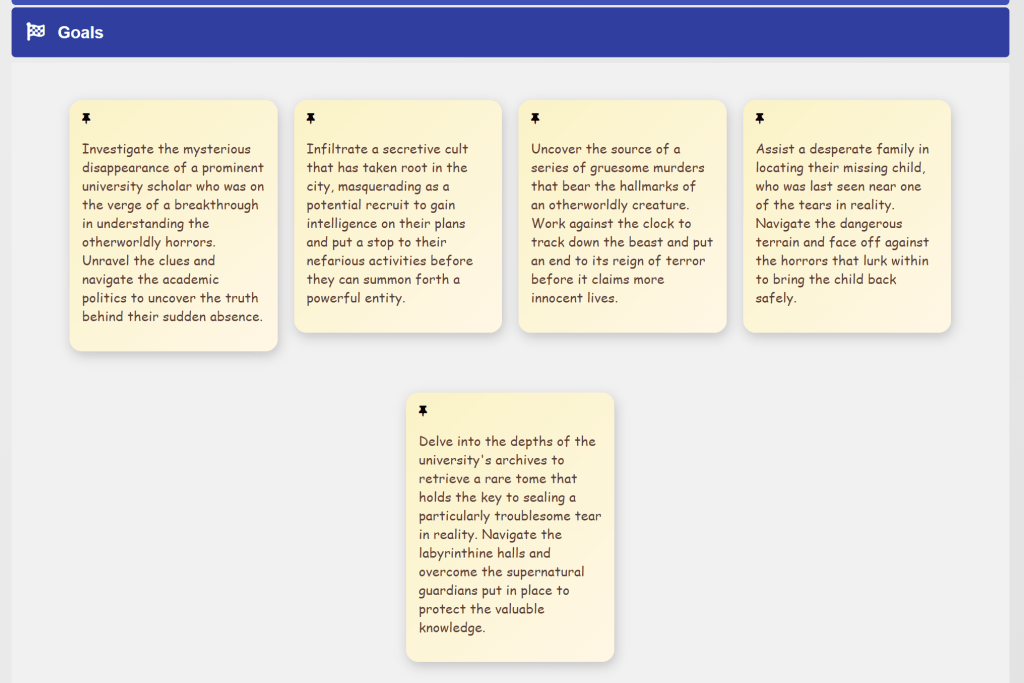

I can imagine the player character returning to work the following day, only to be introduced to some troubled citizen who will present him with a case. However, I have also programmed a way of generating story concepts, interesting situations, interesting dilemmas, and interesting goals, in case the user isn’t too sure how to continue. Let’s generate a few.

Here are a few intriguing concepts the app has generated. When crafting any of these notions, the large language model is presented with the player’s information and that of his followers, and also all the available information about his location (world, region, area, and possibly location).
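To make that concrete, here is one way the context handed to the model could be assembled, with the location line included only when the player is actually inside a location. The `Character` and `Places` dataclasses and the output format are hypothetical; the real prompts in the repo may look quite different.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Character:
    name: str
    bio: str


@dataclass
class Places:
    world: str
    region: str
    area: str
    location: Optional[str] = None  # The player may not be inside a location.


def build_concept_context(
    player: Character, followers: List[Character], places: Places
) -> str:
    """Collect everything the model should know before proposing concepts."""
    lines = [f"Player: {player.name}. {player.bio}"]
    for follower in followers:
        lines.append(f"Follower: {follower.name}. {follower.bio}")
    lines.append(f"World: {places.world}")
    lines.append(f"Region: {places.region}")
    lines.append(f"Area: {places.area}")
    if places.location is not None:
        lines.append(f"Location: {places.location}")
    return "\n".join(lines)
```

The same context string could be reused for story concepts, situations, dilemmas, and goals, with only the final instruction changing.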

I should probably turn those post-its into something else, like papers or something, because such long texts look funky in a vertical format. That’s a minor issue in any case.

Investigating a series of gruesome murders connected to the otherworldly horrors sounds good for a story, and even more so if the earlier encounter with the arrogant student hints at a larger plot involving a secret society of reckless scholars. I also like the notion of a neighbor knocking on his door to ask for help because their daughter has gone missing. Also, the sort of post-apocalyptic story of the grizzled detective leading a ragtag group as they fight against the pouring eldritch horrors sounds pretty fucking dope.

How about general goals?

Some of those are interesting. The disappeared scholar could easily be the aforementioned college girl, so how would the detectives feel about investigating her disappearance? The goal about infiltrating a cult makes me think that I would need a way of altering a character’s description so they can go undercover, because other characters are fed the participants’ descriptions during a conversation. And again, someone is requesting the detective’s help to find their missing child.
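One possible shape for that undercover feature is an optional disguise that overrides what other participants are told, while the true description stays on file. This is purely a design sketch under assumptions: `CharacterAppearance` and its fields are hypothetical, and nothing like it exists in the app as described.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class CharacterAppearance:
    true_description: str
    disguise: Optional[str] = None  # Set while the character is undercover.

    def visible_description(self) -> str:
        """Return the description other characters should be fed."""
        if self.disguise is not None:
            return self.disguise
        return self.true_description
```

Clearing `disguise` back to `None` would restore the normal description without losing anything.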
