I recommend checking out the previous parts if you don’t know what this “neural narratives” thing is about. In short, I wrote a system in Python to have multi-character conversations with large language models (like Llama 3.1), in which the characters are isolated in terms of memories and bios, so nothing leaks to other participants like it does in Mantella. Here’s the GitHub repo.

Last time, I managed to nail creating voice lines for the dialogues of my app, relying on a RunPod server dedicated to generating audio. Now I want to do a serious test run of the app to see what it lacks.

Starting from scratch, I created a new world, a new region of that world, a new area of that region, and a new location of that area. I won’t detail the specifics, because the app itself should convey them the way a story would. If, in the course of testing the system, I feel that something must be implemented to cover a shortcoming, I will do so.

The process of creating a new playthrough requires you to provide some notion of the character you want to play as. I ended up with a good depiction of the guy.

I have the initial setting and the player character (including his automatically assigned voice model). A story needs other characters, so I went to the section that shows the character creation guidelines that have been generated for this combination of places.

I turned them into post-its. Anyway, my protagonist is a police detective, so he could do with a partner. I grabbed the second guideline and made her a woman.

The app doesn’t allow you to access most of the specific data of a character except in very particular circumstances, so in general, you have to glean the specifics of a character from their looks and the conversations you have with them.

In a story, you need some sense of where you are. There’s a system in place for the LLM to generate a description from the first-person perspective of the player character, and at this point it’s trivial to generate a voice line for it:

Let’s interact with the sole other character around (for now). While running a conversation with the protagonist’s partner, I ran into the first issue: when the app had to generate a voice line for a bit of ambient text, the server (the RunPod pod) returned a 404. I guess that even if a pod is technically running, it can intermittently produce 404 errors for whatever reason, so I’ll need to program in some retry system to cover these cases.
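For reference, a retry system of that kind could look roughly like the sketch below. It isn’t the code from the repo: `request_with_retry`, the `send_request` callable, the set of retryable status codes, and the exponential backoff delays are all assumptions made for illustration. The idea is simply to repeat the request a few times, waiting a bit longer after each failure, and to give up loudly if the pod never recovers.

```python
import time
from typing import Callable, FrozenSet, Tuple


def request_with_retry(
    send_request: Callable[[], Tuple[int, bytes]],
    retryable_statuses: FrozenSet[int] = frozenset({404, 502, 503}),
    max_attempts: int = 4,
    base_delay: float = 1.0,
    sleep: Callable[[float], None] = time.sleep,
) -> bytes:
    """Call send_request until it returns status 200 or attempts run out.

    send_request is a hypothetical callable that performs one HTTP request
    to the voice server and returns (status_code, response_body).
    """
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")

    for attempt in range(1, max_attempts + 1):
        status, body = send_request()
        if status == 200:
            return body

        # Give up on statuses we don't consider transient, or when the
        # attempt budget is exhausted.
        if status not in retryable_statuses or attempt == max_attempts:
            raise RuntimeError(
                f"Voice line request failed with status {status} "
                f"after {attempt} attempt(s)"
            )

        # Exponential backoff: base_delay, then 2x, 4x, ...
        sleep(base_delay * 2 ** (attempt - 1))

    raise AssertionError("unreachable")
```

In the app, `send_request` would wrap whatever HTTP call actually reaches the pod. Passing `sleep` as a parameter is only a convenience so that the function can be exercised in tests without really waiting.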

I did do that. Let’s continue.

The “stop your a coffee” was my blunder. Old, stupid fingers.

The couple of grizzled detectives exited the police station, back to the grim city surrounding it.

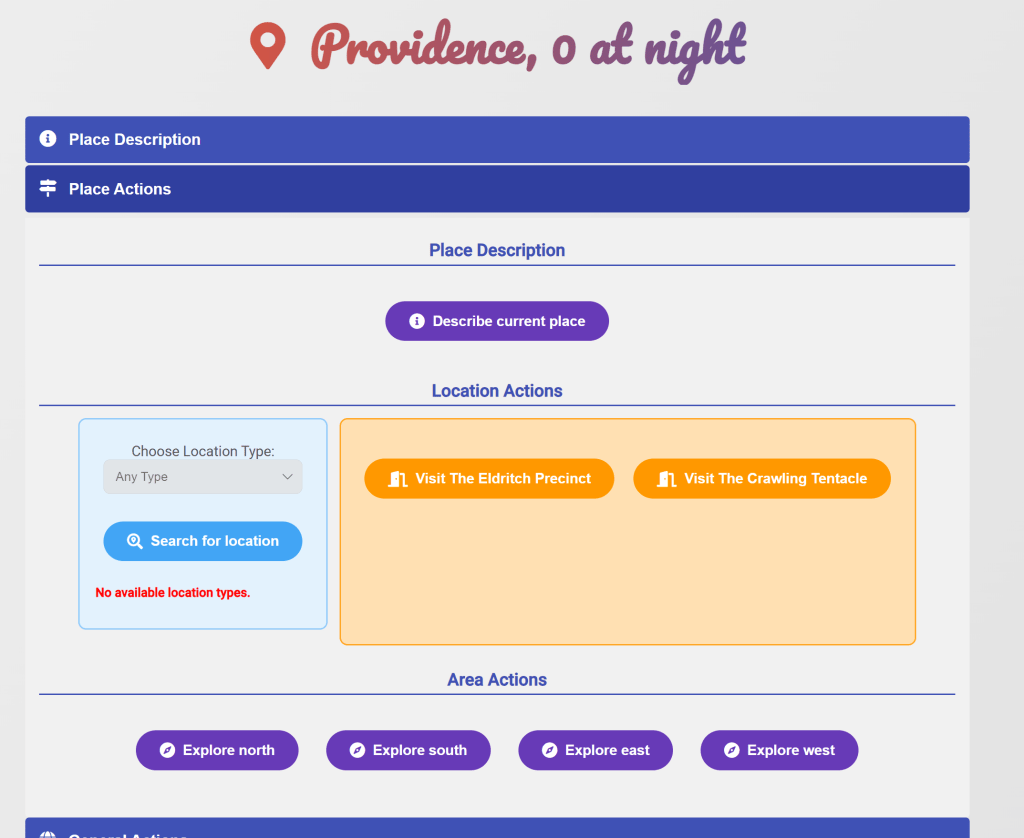

Now I want my characters to move to the mentioned location, some bar. Even though I didn’t create any locations for this run other than the police station, there is a lingering issue with the interface: when plenty of possible locations exist, if you press the button “Search for location,” it may link locations you don’t want (like a cave, a hospital, etc.). Now that I was looking specifically for a bar, I figured that I may as well fix this issue.

It took quite a while, but now the user can only search for locations by type. In fact, if no locations are available, because they have already been used or they don’t match the area’s categories (you don’t want a fantasy bar in a cosmic horror story), the select and the button will be disabled.
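The filtering logic described above can be sketched as follows. This is a minimal illustration, not the app’s real data model: the `LocationTemplate` class, its fields, and the rule that a location “matches” an area when they share at least one category are all assumptions on my part.

```python
from dataclasses import dataclass
from typing import FrozenSet, Iterable, List


@dataclass(frozen=True)
class LocationTemplate:
    """Hypothetical stand-in for a stored location template."""
    name: str
    type: str                   # e.g. "bar", "cave", "hospital"
    categories: FrozenSet[str]  # e.g. {"cosmic horror"}
    used: bool = False


def _is_available(template: LocationTemplate, area_categories: FrozenSet[str]) -> bool:
    # Assumption: a template matches when it shares a category with the area.
    return not template.used and bool(template.categories & area_categories)


def available_location_types(
    templates: Iterable[LocationTemplate], area_categories: FrozenSet[str]
) -> List[str]:
    """Types to offer in the select; an empty list means 'disable the controls'."""
    return sorted({t.type for t in templates if _is_available(t, area_categories)})


def search_locations(
    templates: Iterable[LocationTemplate],
    area_categories: FrozenSet[str],
    wanted_type: str,
) -> List[LocationTemplate]:
    """Unused templates of the wanted type that fit the area's categories."""
    return [
        t for t in templates
        if t.type == wanted_type and _is_available(t, area_categories)
    ]
```

With something like this in place, the interface would populate the select from `available_location_types(...)` and disable both the select and the search button whenever that list comes back empty.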

Well, that was all for today. I expected to do more, but reworking that interface was arduous.
